Information paper no. 01 | 2017 | Date updated: 2 September 2022

PBO information papers are published to help explain, in an accessible way, the underlying data, concepts, methodologies and processes that the PBO uses in preparing costings of policy proposals and budget analyses. The focus of PBO information papers is different from that of PBO research reports which are aimed at improving the understanding of budget and fiscal policy issues more broadly.

Introduction

Costings produced by the Parliamentary Budget Office (PBO) are estimates of the financial impact of policy proposals on the Commonwealth Government’s budget. They generally cover a future time period of between four and 10 years. Although they are the PBO’s best possible estimates of the budget impact of a policy, all costing estimates are subject to some degree of uncertainty about how closely they would correspond to actual outcomes were the policy proposal implemented. The level of uncertainty varies from costing to costing depending on factors such as data quality, assumptions, methodology, the volatility of the costing base and the magnitude of the policy change.

The PBO is committed to providing transparency in relation to the factors that affect the reliability of any given costing. This information paper has been prepared to raise awareness of the factors affecting uncertainty in costings and how the PBO deals with them.

1 Why is uncertainty an issue in costings?

A key role of the PBO is to prepare costings of policy proposals for parliamentarians. The PBO’s estimates are prepared subject to the same rules and conventions as government budget estimates and are the PBO’s best possible estimates of the financial impact of a policy, given the information, time and resources available.

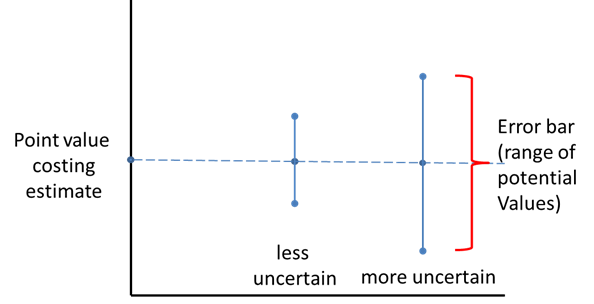

Notwithstanding this, a number of elements introduce uncertainty into the costing process. As a result, the point estimates in some costings are more likely than those in others to represent the actual outcome, were the policy implemented (see Figure 1). Uncertainty is also one reason why a costing of a policy prepared at one time can differ from a costing of the same policy prepared at a later date.

Figure 1: Uncertainty in costings

Estimates are presented as point or central estimates in costings.

Uncertainty means that the actual outcome could be any of a range of values. The more uncertain the estimate, the larger the resulting ‘error bar’, as illustrated in the diagram above.

Uncertainty affects all costings, regardless of who produces them. The issue of uncertainty in projections and costings has been recognised by the United States Congressional Budget Office, which seeks to highlight the level of uncertainty in its estimates through qualitative statements.[1] The United Kingdom’s Office for Budget Responsibility also recognises uncertainty in costings and has recently adopted a system of uncertainty ratings for each certified policy costing included in the United Kingdom Budget.[2]

The PBO includes commentary in its costing reports about the factors that may lead to the actual outcome from a policy proposal, should it be implemented, differing from the costing estimate. This commentary is intended to alert the user of the costing to factors that affect the level of uncertainty of the costing estimate.

The key factors that affect the uncertainty of costing estimates are discussed in Section 2, and the PBO’s approach to providing transparency on these factors is discussed in Section 3.

2 Factors affecting the level of uncertainty of costings

The most important factors affecting the level of uncertainty of costings are:

- the quality of the data available to undertake the costing

- the number and soundness of any assumptions made in the costing analysis

- the volatility of the costing base

- the magnitude of the policy change.

2.1 Data quality

Data are the factual base from which the costing analysis starts. Data are used as the basis for describing the costing base and/or eligible population for a costing analysis. The data used in policy costings can come from a range of sources which can differ significantly in quality, where quality is measured in terms of how well the data represent the target population for a costing analysis. The lower the quality of the data, the more uncertain the costing becomes.

Data from sources such as unit record administrative data are highly reliable as they represent the actual outcome of programs or revenues for a particular period and in many cases can provide a high level of detail regarding the target population for a costing analysis.

The Australian Bureau of Statistics (ABS) produces a wide range of high quality data that are the product of rigorous statistical analysis. In many cases, these data may be derived from the high quality administrative sources described above. Other ABS data are derived from surveys and generally come with quantitative measures of the level of uncertainty in the data, and indicators against the more uncertain data values.
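The ABS caveats quoted in the footnotes to this paper are based on the relative standard error (RSE), which is the standard error of an estimate expressed as a percentage of the estimate. The Python sketch below shows one way such flags could be applied. The function names, caveat labels and example estimates are hypothetical. Only the 10, 25 and 50 per cent thresholds come from the ABS caveats cited.

```python
def relative_standard_error(estimate: float, standard_error: float) -> float:
    """Return the RSE: the standard error as a percentage of the estimate."""
    if estimate == 0:
        raise ValueError("RSE is undefined for a zero estimate")
    return 100.0 * standard_error / abs(estimate)


def rse_caveat(rse_percent: float) -> str:
    """Map an RSE (per cent) to a caveat.

    Thresholds follow the ABS notes quoted in the footnotes;
    the label wording is hypothetical."""
    if rse_percent > 50:
        return "too unreliable for general use"
    if rse_percent >= 25:
        return "* use with caution (RSE 25 to 50 per cent)"
    if rse_percent >= 10:
        return "^ use with caution (RSE 10 to less than 25 per cent)"
    return "no caveat"


# Hypothetical survey estimates paired with their standard errors.
for estimate, se in [(1200.0, 60.0), (80.0, 16.0), (40.0, 12.0), (5.0, 3.0)]:
    rse = relative_standard_error(estimate, se)
    print(f"estimate {estimate:7.1f}  RSE {rse:5.1f}%  {rse_caveat(rse)}")
```

The four example estimates have RSEs of 5, 20, 30 and 60 per cent, so each one falls into a different caveat band.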

Less reliable data include data that do not provide precisely the information being sought, that come from small sample surveys, or that originate from less rigorous statistical sources or sources with an uncertain reputation.

Using older data introduces a further element of uncertainty into costings that becomes greater the longer the time between the data’s reference date (ie the period to which the data for the target population refers) and the period of the costing analysis. That is because the extrapolation of data from the reference period to the costing period introduces uncertainty related to both the projection parameters being used and changes in the target population over time.

In many cases, timely high quality data may not be available, with the result that a costing will have to be based on lower quality data sources, introducing a greater level of uncertainty into the costing estimates.

Figure 2 provides examples of how a range of data sources can be categorised in terms of their relative uncertainty.

Figure 2: Data quality and uncertainty

2.2 Assumptions

In addition to data, costing analyses always require a set of assumptions about matters such as the context for the policy, policy settings or behavioural responses. Assumptions are important elements of the costing ‘model’ that is developed. Unlike data, which are the factual information on which the costing is based, assumptions are based on a combination of empirical evidence, theoretical conjecture and professional judgement.

Assumptions are used in costings in a number of roles:

- to fill gaps in the information or data underlying the costing

- to take account of unknown elements in the costing, such as the behavioural responses of those affected by a proposal, or

- as elements in the specification of the structure of the costing model.

The assumptions used in a costing may be explicit or implicit. Explicit assumptions are those where the analyst makes a deliberate choice in setting the value of the assumption. For example, an adjustment may be made to account for a specific type and amount of behavioural change. Implicit assumptions are the (less obvious) assumptions embedded in aspects of the costing such as the structure of the model or the methodology used to produce the estimates, and they often relate to matters such as compliance.

The impact of assumptions on the reliability of a costing will depend upon factors such as how reasonable each assumption is, the range of potential values that an assumption could have, what impact variations in the assumption have on the costing outcome and the number of assumptions needed to produce a costing outcome.

- The ‘reasonableness’ of an assumption can be tested by how acceptable it is to a reasonable person or by reference to supporting evidence such as academic studies, quantitative analysis or previous experience.

- There may be a range of ‘reasonable’ values that could be used in an assumption. The wider this reasonable range is, the more uncertain the costing will be.

- Assumptions differ in how strongly variations in their values affect a costing estimate. The greater the impact that an assumption has on the costing outcome, the greater the uncertainty. The impact of assumptions can be determined by sensitivity analysis.

- The number of assumptions made in a costing affects the uncertainty of the results, as does the way in which these assumptions interact. Generally, the more assumptions required to complete a costing, the more uncertain the result will be. This uncertainty is further affected by how the assumptions interact, for instance whether they cancel out, are additive or compound in their effects.
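Sensitivity analysis, and the way assumptions can compound, can be illustrated with a deliberately simple costing model in which the estimate is the product of three assumptions: eligible population, take-up rate and average payment. The model, the parameter values and the 10 per cent shift are all hypothetical and are not PBO parameters. In this Python sketch, varying one assumption at a time moves the estimate by 10 per cent. Because the assumptions multiply, however, varying all three together gives a range of roughly -27 to +33 per cent.

```python
from itertools import product


def costing(population: float, take_up: float, avg_payment: float) -> float:
    """Hypothetical costing model: annual cost = population x take-up x payment."""
    return population * take_up * avg_payment


def one_at_a_time(model, params: dict, shift: float = 0.10) -> dict:
    """Shift each assumption down and up by `shift` (proportionally),
    holding the other assumptions at their central values."""
    results = {}
    for name, value in params.items():
        low = model(**{**params, name: value * (1 - shift)})
        high = model(**{**params, name: value * (1 + shift)})
        results[name] = (low, high)
    return results


def joint_range(model, params: dict, shift: float = 0.10) -> tuple:
    """Evaluate every low/high combination of the assumptions and
    return the smallest and largest resulting estimates."""
    names = list(params)
    estimates = []
    for signs in product((-1, 1), repeat=len(names)):
        scenario = {n: params[n] * (1 + s * shift) for n, s in zip(names, signs)}
        estimates.append(model(**scenario))
    return min(estimates), max(estimates)


central = {"population": 200_000, "take_up": 0.6, "avg_payment": 1_500.0}
base = costing(**central)

for name, (low, high) in one_at_a_time(costing, central).items():
    print(f"{name:12s} {low / base - 1:+.1%} to {high / base - 1:+.1%}")

low, high = joint_range(costing, central)
print(f"{'all jointly':12s} {low / base - 1:+.1%} to {high / base - 1:+.1%}")
```

The joint range is wider than the sum of the individual ranges on the upside (+33.1 rather than +30 per cent). This is the compounding effect described in the last point above.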

Figure 3 summarises how assumptions can affect the reliability of a costing.

Implicit assumptions can impact on the uncertainty of a costing, often without their effect being obvious. Some commonly used implicit assumptions are:

- Rationality - This assumption is that entities affected by a policy change will respond in a ‘rational’ manner. This most commonly involves assuming that those affected by a policy (consumers, taxpayers, companies, etc) will act in their own best interests, such as by maximising income or minimising taxes. This assumption may not always play out in reality, as behaviour may be more complex or those affected by a policy may have different objectives.

- Compliance - This assumption is that entities affected by a regulatory change will comply with the regulation that is imposed. Compliance behaviour may, however, depend on other factors, such as the level of penalty for non-compliance and the resources allocated to enforcement.

- Implementation - Costings will often assume that the policy proposal can be implemented. In practice, there may be a number of legal, technical or practical obstacles to implementing a policy that are assumed away in a costing.

Another assumption sometimes used in analysis is that of no behavioural change. Costings that employ this assumption estimate only the ‘static’ impact of a proposal, because they do not take account of behavioural impacts. This assumption should be treated with caution because it implies that the transactions concerned are completely price inelastic. For policies that affect price-sensitive transactions or that are intended to change behaviour, a ‘no behaviour change’ assumption could detract from the reliability of the costing.
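The gap between a static and a behavioural estimate can be sketched for a hypothetical per-unit tax on a price-sensitive transaction. The Python sketch assumes constant-elasticity demand and full pass-through of the tax to the price paid. Both of these assumptions, and all of the parameter values, are hypothetical. Setting the elasticity to zero reproduces the ‘no behaviour change’ assumption, which is why that assumption amounts to treating the transactions as completely price inelastic.

```python
def revenue_change(base_quantity: float, pre_tax_price: float,
                   old_tax: float, new_tax: float, elasticity: float) -> float:
    """Change in revenue from raising a per-unit tax from old_tax to new_tax.

    Assumes (hypothetically) full pass-through of the tax to the price paid and
    demand with a constant price elasticity. elasticity = 0 gives the static estimate."""
    old_price = pre_tax_price + old_tax
    new_price = pre_tax_price + new_tax
    new_quantity = base_quantity * (new_price / old_price) ** elasticity
    return new_tax * new_quantity - old_tax * base_quantity


# Hypothetical inputs: 1,000 units, $10 pre-tax price, tax rising from $10 to $15.
static = revenue_change(1_000, 10.0, 10.0, 15.0, elasticity=0.0)
behavioural = revenue_change(1_000, 10.0, 10.0, 15.0, elasticity=-0.4)
print(f"static estimate:      {static:,.0f}")
print(f"behavioural estimate: {behavioural:,.0f}")
```

With these inputs the static estimate is 5,000. The behavioural estimate is about 3,700, because quantity falls by roughly 8.5 per cent when the price rises by 25 per cent.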

Implicit assumptions may be difficult to identify because they are often built into the costing methodology and cannot be readily manipulated, giving rise to a risk that they are overlooked. Nonetheless, implicit assumptions can affect the reliability of a costing as much as any other costing assumption.

2.3 Volatility of the costing base

The ‘costing base’ refers to the most recent budget estimates for the program or item of revenue to which the costing relates. For example, to cost a proposal to change the taper rate of the Age Pension, the costing base would be the estimate for Age Pension payments contained in the most recent economic and fiscal outlook statement.

Volatility of the costing base affects the reliability with which that costing base can be grown over the projection period. Where a costing base shows predictable growth over the projection period, with little variation from year to year, costing projections can be made with more confidence.

On the other hand, some costing bases show highly variable growth from year to year. These year-to-year variations make forecasting these bases extremely uncertain and significantly increase the uncertainty of any costings of policy variations to the costing bases concerned.

Figure 4: Uncertainty - volatile versus stable costing base

Even though both costing bases are based on the same underlying trend, projections of the stable costing base will be much less uncertain than projections of the volatile costing base.

Figure 4 illustrates how volatility affects the reliability with which costing bases can be projected. Both the ‘volatile’ and ‘stable’ time series are based on exactly the same underlying trend shown as the thin dark red line. Each series varies randomly from that trend, with the volatile series varying by much more than the stable series.

Each panel of the chart depicts 30 periods of data. The first 26 periods of ‘actual’ data are shown as a solid blue line, and the ‘outcomes’ in the projection period are shown as a dashed blue line. In each case, forecasts for the projection period are based on the trend calculated from the actual data, which is shown as the solid red line. The figure illustrates that the estimated trend for a volatile series can differ significantly from the true underlying trend, whereas the estimated trend for a stable series will generally be much closer to it.

Figure 4 shows that, even before new policies are considered, projections of a volatile costing base are likely to be much less representative of the underlying trend than projections of a stable costing base. This creates a significant degree of uncertainty about the costing baseline. The figure also shows that projections for a volatile series are much less likely than those for a stable series to correspond with the outcomes in the projection period.
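The mechanism behind Figure 4 can be reproduced with a small simulation. The Python sketch below makes several assumptions: a linear underlying trend, normally distributed deviations from that trend, and an ordinary least squares linear trend fitted to the 26 ‘actual’ periods and projected over the remaining four. The intercept, slope and noise levels are hypothetical, and the method the PBO used to estimate the trend in Figure 4 may differ. Averaged over many simulated series, the projection errors for the volatile series are far larger than those for the stable series, whether measured against the true trend or against the outcomes.

```python
import random


def fit_linear_trend(y):
    """Ordinary least squares fit of y[t] = a + b*t for t = 0, ..., len(y) - 1."""
    n = len(y)
    t_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (v - y_mean) for t, v in enumerate(y))
    slope = sxy / sxx
    return y_mean - slope * t_mean, slope


def projection_errors(noise_sd, seed, n_periods=30, n_actual=26,
                      intercept=100.0, slope=2.0):
    """Simulate one series around a known linear trend, fit a trend to the
    'actual' periods only, and project it over the remaining periods.

    Returns (mean absolute gap from the true trend,
             mean absolute gap from the simulated outcomes)."""
    rng = random.Random(seed)
    trend = [intercept + slope * t for t in range(n_periods)]
    series = [level + rng.gauss(0.0, noise_sd) for level in trend]
    a, b = fit_linear_trend(series[:n_actual])
    horizon = range(n_actual, n_periods)
    projection = [a + b * t for t in horizon]
    trend_gap = sum(abs(p - trend[t]) for p, t in zip(projection, horizon))
    outcome_gap = sum(abs(p - series[t]) for p, t in zip(projection, horizon))
    return trend_gap / len(horizon), outcome_gap / len(horizon)


def average_errors(noise_sd, replications=2000):
    """Average both error measures over many simulated series."""
    results = [projection_errors(noise_sd, seed) for seed in range(replications)]
    return (sum(r[0] for r in results) / replications,
            sum(r[1] for r in results) / replications)


stable = average_errors(noise_sd=2.0)     # small deviations from the trend
volatile = average_errors(noise_sd=20.0)  # large deviations from the same trend
print(f"stable:   {stable[0]:.2f} from trend, {stable[1]:.2f} from outcomes")
print(f"volatile: {volatile[0]:.2f} from trend, {volatile[1]:.2f} from outcomes")
```

The same random draws are reused for both noise levels. As a result, the volatile errors come out at roughly ten times the stable ones, mirroring the tenfold difference in the assumed deviations from trend.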

The volatility of the costing base is not a data quality issue. It is simply a reflection of how predictable the underlying costing base is. Volatility can arise because the costing base is affected by random events or because of underlying influences on that base such as economic conditions, market events or consumer sentiment.

For instance, costings affecting gross income tax revenue (ie personal income tax withheld from wages and salaries) for the whole population are more certain than costings affecting capital gains tax (CGT) revenue. This is because gross income tax revenue tends to be very stable from year to year, affected only at the margin by variations in nominal wages growth and employment growth. On the other hand, CGT revenue can vary substantially as asset prices rise or fall relative to the CGT cost base of assets and as taxpayers adjust the realisation rate of assets in response to asset price changes.

The reliability with which the costing base can be projected will generally decline the longer the forecasting horizon becomes because forecasting errors tend to compound over time.
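The compounding of forecasting errors can be illustrated with simple growth arithmetic. Suppose a base is projected at an assumed annual growth rate but actually grows at a different rate. The proportional gap after h years is ((1 + actual) / (1 + assumed))^h - 1, which widens with every year of the projection period. The growth rates in this Python sketch are hypothetical.

```python
def projection_gap(assumed_growth: float, actual_growth: float, years: int) -> float:
    """Proportional gap between the actual and projected level of a costing base
    after `years`, when it is projected at `assumed_growth` but grows at `actual_growth`."""
    return ((1 + actual_growth) / (1 + assumed_growth)) ** years - 1


# Hypothetical: base projected to grow 3 per cent a year, actually grows 4 per cent.
for years in (1, 4, 10):
    gap = projection_gap(0.03, 0.04, years)
    print(f"year {years:2d}: projection understates the base by {gap:.1%}")
```

In this example, a one percentage point error in the growth assumption produces a gap of about 1 per cent after one year, about 4 per cent after four years and about 10 per cent after ten years.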

2.4 The magnitude of the policy change

A further source of uncertainty arises from the scale of policy change, that is, how far a policy proposal departs from existing policy. The larger the scale of a policy change, the more uncertain the resulting costing is likely to be. This is due to a number of factors:

- Large policy proposals may affect previously unaffected populations, increasing the uncertainty associated with behavioural responses as programs extend to populations whose responses have not been tested previously.

- Information on responses to policy changes generally relates to small changes ‘at the margin’ rather than ‘significant changes’ in entitlements or liabilities. Large changes, therefore, increase the uncertainty associated with behavioural assumptions.

- Data may not exist in relation to changes that extend entitlements or liabilities beyond the existing costing base. As a result, additional assumptions are needed to determine critical parameters such as the size of the population affected by a new policy. These additional assumptions can substantially increase the uncertainty associated with the costing.
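The problem of evidence that applies only ‘at the margin’ can be sketched by comparing two demand specifications that agree exactly for very small changes, since both have the same hypothetical elasticity of -0.4 at the current price. One is a linear, first-order approximation; the other is a constant-elasticity curve. The elasticity value and both functional forms are illustrative assumptions. For a 5 per cent price rise the two specifications agree to within about 0.1 percentage points. For a 50 per cent rise they differ by about 5 percentage points, and data on small changes cannot show which form is closer to reality.

```python
def linear_response(price_change: float, elasticity: float) -> float:
    """First-order ('at the margin') approximation:
    proportional change in quantity = elasticity x proportional change in price."""
    return elasticity * price_change


def constant_elasticity_response(price_change: float, elasticity: float) -> float:
    """Proportional change in quantity under a constant-elasticity demand curve."""
    return (1 + price_change) ** elasticity - 1


ELASTICITY = -0.4  # hypothetical elasticity estimated from small price changes

for price_change in (0.05, 0.50):
    lin = linear_response(price_change, ELASTICITY)
    ce = constant_elasticity_response(price_change, ELASTICITY)
    print(f"price +{price_change:.0%}: linear {lin:+.1%}, "
          f"constant elasticity {ce:+.1%}, gap {abs(lin - ce):.1%}")
```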

The transition and implementation details are also important elements of a costing specification that can affect uncertainty, particularly for large policy changes. These details can significantly affect the actual costs through elements such as take-up rates and compliance.

Table 1 provides examples of how the magnitude of policy change affects the uncertainty of a costing estimate.

Table 1: How the size of policy change affects uncertainty

| Category | Hypothetical example | Uncertainty generated |
|---|---|---|
| Policy change affects previously unaffected populations | New income-tested tax offset for undertaking specified activities | Data on the existing level of the specified activity may or may not exist. Income tax data for assessing eligibility under the income test exist, but the take-up rates are unknown, both in relation to the inducement to undertake the specified activity and the rate at which people make claims. |
| Information on behavioural response exists at the margin but not for large changes | 50 per cent increases in tobacco excise | Excise already accounts for a significant portion of the price of tobacco products, so the scheduled increases can be expected to add significantly to the price. The extent to which the excise increase is passed on to consumers may become more uncertain as higher prices reduce demand for the product. The price elasticity of products is typically estimated by looking at the impact of small increases in price. The response to a large increase in price is less certain, particularly as income effects may also occur. |
| Data may not exist for some changes | Halve the pension assets test withdrawal rate | The policy would extend eligibility for part-rate pensions to previously ineligible people. Pension administrative data are not available for the previously ineligible population, so impacts must be assessed by imputing or extrapolating data. Alternative data sources on the distribution of assets may be less reliable. |
| Large policy change with significant transitional uncertainty | Introduction of the goods and services tax (GST) | Transitional uncertainty arose in relation to price adjustments, GST accounting rules, implementation of point-of-sale technology, the transition from the previous wholesale sales tax regime, and compliance issues. Uncertainty also arose regarding the demarcation between GST-free and taxable goods. These uncertainties affected costing estimates, particularly in relation to the timing and scale of collections. |

3 How the PBO takes account of uncertainty in costings

The PBO is committed to providing transparency in relation to the factors that affect the reliability of the costings it prepares. This means that PBO costings identify those factors that could be expected to make the actual costing outcome, were the policy proposal to be implemented, significantly different from the point estimate provided in the costing.

The PBO’s approach to identifying uncertainty in its costings is to include a qualitative statement in the costing overview that sets out the key factors that impact on uncertainty in the costing, drawing on the considerations outlined in sections 2.1 to 2.4 above. These qualitative statements highlight particular factors that could be expected to impact on the uncertainty of the costing, such as the quality and currency of the data used, the quality, number and impact of the assumptions made and the volatility of the underlying costing base. These statements may be further elaborated upon in the commentary on assumptions and methodology included in the costing minute.

The assessment of the level of uncertainty in a costing is also reflected in the rounding of estimates. Estimates are rounded so that they convey only meaningful information: as the level of uncertainty in a costing increases, the number of significant figures reported is reduced.
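The link between uncertainty and rounding can be expressed as a significant-figures rule. This paper does not specify how many significant figures correspond to each level of uncertainty. The mapping in the Python sketch below (three, two or one significant figures) and the example estimate are therefore hypothetical.

```python
import math


def round_to_significant(value: float, sig_figs: int) -> float:
    """Round `value` to `sig_figs` significant figures."""
    if value == 0:
        return 0.0
    magnitude = math.floor(math.log10(abs(value)))
    return round(value, sig_figs - 1 - magnitude)


# Hypothetical mapping from an uncertainty assessment to significant figures.
SIG_FIGS_BY_UNCERTAINTY = {"low": 3, "medium": 2, "high": 1}

estimate = 1234.5678  # hypothetical costing estimate, $m
for level, sig in SIG_FIGS_BY_UNCERTAINTY.items():
    print(f"{level:6s} uncertainty: {round_to_significant(estimate, sig):,.0f}")
```

Python's built-in `round` resolves exact ties by rounding half to even. A costing model might instead adopt a different tie-breaking convention.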

Footnotes:

[1] See: ‘Communicating the Uncertainty of CBO's Estimates’, post by Doug Elmendorf, 15 December 2014, http://www.cbo.gov/publication/49860.

[2] See: ‘Economic and fiscal outlook’, Office for Budget Responsibility, United Kingdom, March 2015, p. 201.

[3] Household Income and Labour Dynamics in Australia survey, conducted by the Melbourne Institute.

[4] For example, data in the ‘2015–16 Key industry figures’ table of ABS Cat. No. 8155.0 - Australian Industry, 2015–16, are caveated: ‘^ estimate has a relative standard error of 10 per cent to less than 25 per cent and should be used with caution’ or ‘* estimate has a relative standard error of 25 per cent to 50 per cent and should be used with caution’.

[5] For example, data in Table 2 of ABS Cat. No. 8155.0 - Australian Industry, 2015–16, are caveated: ‘estimate has a relative standard error of greater than 50 per cent and is considered too unreliable for general use’.